English is just math in prettier clothing

Word2Vec and other ways of casting English to vector form

Here’s a simple premise: neural networks know how to work on floating-point numbers. English is not represented in floating-point numbers (duh). So how do we tackle NLP problems and teach NNs to output English or other languages?

We need some way to relate words to vectors. We could simply count upward, assigning “aardvark” the number 0 and going up from there. But this doesn’t capture the relationship between two words, like “man” and “woman”. Ideally, we want the numbers themselves to capture this relationship.
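To see why plain counting falls short, here is a minimal sketch. The four-word vocabulary is a toy assumption of mine, and the one-hot encoding is a common alternative I am adding for comparison, not something taken from the paper.

```python
import numpy as np

# Toy vocabulary; the words and their order are illustrative assumptions.
vocab = ["aardvark", "man", "woman", "zebra"]
word_to_id = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Return a vector with 1.0 at the word's ID and 0.0 elsewhere."""
    vec = np.zeros(len(vocab))
    vec[word_to_id[word]] = 1.0
    return vec

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Integer IDs impose an arbitrary ordering: by ID distance, "man" is as
# close to "aardvark" as it is to "woman" (both gaps are 1).
id_gap_man_woman = abs(word_to_id["man"] - word_to_id["woman"])
id_gap_man_aardvark = abs(word_to_id["man"] - word_to_id["aardvark"])

# One-hot vectors drop the ordering, but now every pair of distinct words
# is equally dissimilar (cosine similarity 0.0).
sim_man_woman = cosine(one_hot("man"), one_hot("woman"))
sim_man_zebra = cosine(one_hot("man"), one_hot("zebra"))
```

Neither encoding says anything about meaning, which is the gap that learned vectors are meant to fill.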

Enter: embeddings. The paper we discuss today is old — all the way back from 2013. It introduced “Word2Vec”, a technique to convert words to vectors while retaining their semantic meaning.

What a classic. Let’s get into it!

Introduction and Motivation

Goal: Capture not only similar words, but multiple degrees of similarity, in a way that scales to vocabularies with millions of words and datasets with billions of words.

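The paper looks at linear relationships between word vectors. Its well-known example is that vector(“King”) - vector(“Man”) + vector(“Woman”) lands closest to vector(“Queen”).

The authors try to maximize the accuracy of these vector operations by developing new model architectures.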
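To make the arithmetic concrete, here is a minimal sketch with hand-made toy vectors. The three dimensions and every number in them are my own assumptions, picked so the example works out cleanly; real Word2Vec embeddings are learned from data and are far less tidy.

```python
import numpy as np

# Hand-made toy vectors (an assumption for illustration only).
# Dimensions loosely stand for: royalty, maleness, femaleness.
emb = {
    "king":  np.array([0.9, 0.8, 0.1]),
    "queen": np.array([0.9, 0.1, 0.8]),
    "man":   np.array([0.1, 0.8, 0.1]),
    "woman": np.array([0.1, 0.1, 0.8]),
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# king - man + woman: remove "maleness", add "femaleness".
result = emb["king"] - emb["man"] + emb["woman"]

# Compare the result against every word in the toy vocabulary.
similarities = {word: cosine(result, vec) for word, vec in emb.items()}
closest = max(similarities, key=similarities.get)
```

With these toy numbers, `result` coincides with the vector for "queen", so `closest` is `"queen"`. On learned embeddings the match is only approximate, which is why the closest word is found by similarity rather than by equality.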

Let’s discuss the two primary architectures below!

Development Details

Continuous Bag of Words (CBOW): Given the words surrounding a position in a sentence, we attempt to predict the middle word.

Skip-gram: Given a sentence, we use the middle word and attempt to predict the surrounding words.

In the above example, the context length of each model is “5”, but it can be longer when training a real model.
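Here is a rough sketch of how the two setups turn a sentence into training examples. The function names, the example sentence, and `window=2` are all assumptions of mine; I am reading a "context length of 5" as two words on each side plus the middle word, which may not match the original figure exactly.

```python
def cbow_pairs(tokens, window=2):
    """Build (context_words, target_word) examples: context in, middle word out."""
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        context = left + right
        if context:  # skip degenerate one-word sentences
            pairs.append((context, target))
    return pairs

def skipgram_pairs(tokens, window=2):
    """Build (middle_word, context_word) examples: middle word in, one neighbor out."""
    pairs = []
    for context, target in cbow_pairs(tokens, window):
        for neighbor in context:
            pairs.append((target, neighbor))
    return pairs

sentence = "the quick brown fox jumps".split()
cbow = cbow_pairs(sentence)
skip = skipgram_pairs(sentence)
```

For the middle word "brown", CBOW gets one example with the context `["the", "quick", "fox", "jumps"]`, while Skip-gram gets four separate examples, one per neighbor. Words near the edges of the sentence simply get a shorter context in this sketch.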

Evaluation

The models are analyzed 1) semantically and 2) syntactically. First, the authors demonstrate that their models significantly outperform prior art on a word relationship test set.
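A word relationship test can be scored roughly as follows. This is a sketch under assumptions: the toy 4-dimensional embeddings and the two questions are made up by me, and the helper names are hypothetical. The scoring rule (a question only counts if the nearest word is exactly the expected one) follows my understanding of the paper's setup.

```python
import numpy as np

# Toy embeddings (assumed). Dimensions: royalty, gender, size, comparative.
emb = {
    "king":    np.array([1.0,  1.0,  0.0, 0.0]),
    "queen":   np.array([1.0, -1.0,  0.0, 0.0]),
    "man":     np.array([0.0,  1.0,  0.0, 0.0]),
    "woman":   np.array([0.0, -1.0,  0.0, 0.0]),
    "big":     np.array([0.0,  0.0,  1.0, 0.0]),
    "bigger":  np.array([0.0,  0.0,  1.0, 1.0]),
    "small":   np.array([0.0,  0.0, -1.0, 0.0]),
    "smaller": np.array([0.0,  0.0, -1.0, 1.0]),
}

def answer_analogy(emb, a, b, c):
    """Answer 'a is to b as c is to ?' with the nearest word by cosine similarity."""
    query = emb[b] - emb[a] + emb[c]
    query = query / np.linalg.norm(query)
    best_word, best_sim = None, -np.inf
    for word, vec in emb.items():
        if word in (a, b, c):  # the question words cannot be the answer
            continue
        sim = float(query @ vec / np.linalg.norm(vec))
        if sim > best_sim:
            best_word, best_sim = word, sim
    return best_word

def analogy_accuracy(emb, questions):
    """Fraction of questions whose nearest word exactly matches the expected one."""
    correct = sum(answer_analogy(emb, a, b, c) == d for a, b, c, d in questions)
    return correct / len(questions)

questions = [
    ("man", "woman", "king", "queen"),     # semantic relationship
    ("big", "bigger", "small", "smaller"), # syntactic relationship
]
accuracy = analogy_accuracy(emb, questions)
```

Because the toy vectors were built to be perfectly linear, both questions come out right here. Learned embeddings will miss some, and under exact-match scoring a near-synonym of the expected word still counts as a miss.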

Part of the paper’s claim is that their models would scale better. They demonstrate this using a 1000-dimension vector embedding space and 6B training words.

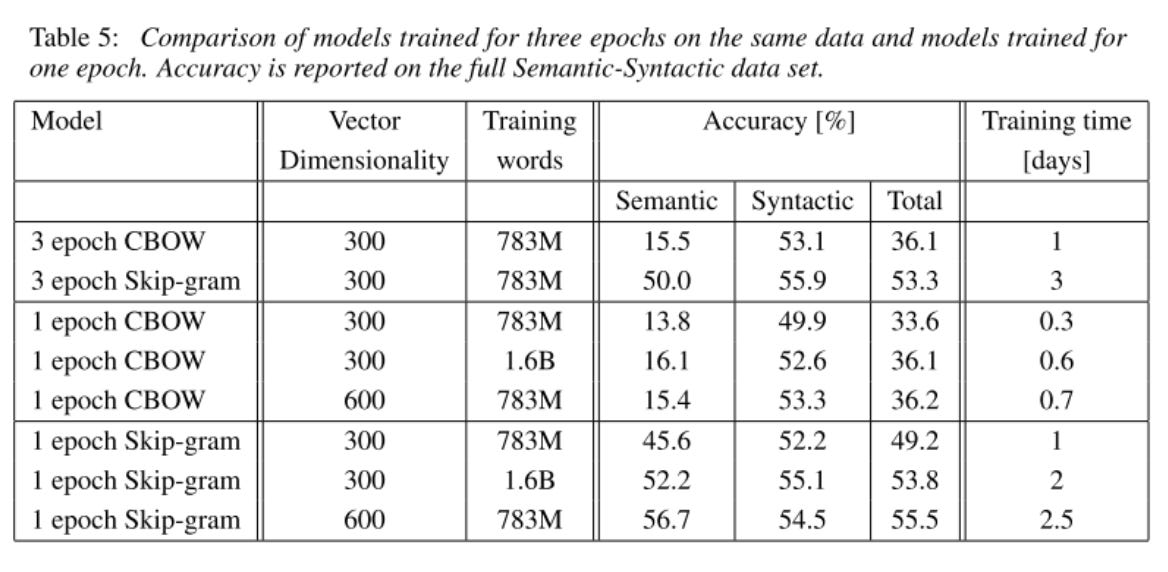

Another interesting observation is that models trained for more epochs but with lower dimensionality do substantially better.

The impact of epochs on training accuracy remains an open question in ML research.

Limitations and Future Work

In their high-dimensional comparison against NNLM (the old SOTA), they give NNLM a 100-dimensional space and their own models a 1000-dimensional space. They do not justify this. I suspect something like NNLM could not feasibly be trained at that dimensionality. I would have liked to see a fairer comparison.

100% accuracy is likely impossible with the given models, since they have no input information about word morphology. Future work could incorporate information about the structure of words, which would especially increase syntactic accuracy.

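The paper was written before the attention/transformer era of neural nets. Its baselines are feedforward and recurrent neural network language models, and its own CBOW and Skip-gram models are shallow log-linear models rather than RNNs. A transformer model with an attention block would probably do better at learning these embeddings, and has indeed been used in later work.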

In summary: what an amazing paper to read. In retrospect, these ideas look so simple. You could implement word2vec in an evening if you wanted. But it’s hard to predict when scale will help a problem break through, which is the bet they made here, and it paid off. A parting thought: what other problems haven’t been scaled up yet that might improve significantly if they were?

Until next time!