Models can control robots just like humans

PaLM-SayCan creates interactions between smart robots and models

Today, we’re talking robots.

Not only are we talking robots, we’re talking about talking to robots. We want a robot butler! We give it a high-level instruction (“I’m thirsty, fetch me something”) and have it figure out how to execute the necessary actions itself. Modern robots are capable enough to execute a task like fetching a water bottle from the fridge, but they need someone to give them step-by-step instructions. Robots lack the semantic knowledge about the world needed to break a task down into executable chunks.

You know what does possess this knowledge, though? That’s right: our good friends, Large Language Models! So what if we combined the two?

Today, we're reading “Do As I Can, Not As I Say: Grounding Language in Robotic Affordances” by Robotics @ Google.

Introduction and Motivation

Goal: Use Large Language Models to instruct a robot to perform a specific task (“Get me a can of coke”)

Constraints: LLMs have one core, glaring problem — they hallucinate. They are not grounded in the physical world and do not observe the consequences of their generations on any physical process. Thus they make mistakes that seem unreasonable, and might interpret instructions in impossible ways.

For example, if the user prompt is “I spilled some chips on the floor help”, the LLM might say “get a vacuum cleaner”, even though there may not be a vacuum cleaner in the apartment at all. How do we give the model an understanding of what’s reasonable?

Solution: Let the robot explain what it can and can’t do. The robot is given a repository of “atomic” behaviors it is capable of. Each skill has an affordance function that quantifies how likely it is to succeed from the current state.
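The repository can be pictured as a list of skills, each pairing a text description (which the LLM will later read) with an affordance function of the current state. The sketch below is a minimal Python illustration under assumed names: `Skill`, `needs_visible`, the dictionary-based state, and every probability are hypothetical, and in SayCan the affordances come from learned value functions rather than hand-written rules.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Toy robot state (an assumption): maps object names to whether the robot currently sees them.
State = Dict[str, bool]


@dataclass(frozen=True)
class Skill:
    description: str                      # natural-language text the LLM will score
    affordance: Callable[[State], float]  # estimated probability of success from this state


def needs_visible(obj: str, p_success: float = 0.9) -> Callable[[State], float]:
    """Hypothetical affordance: likely to succeed only if `obj` is in view."""
    def value(state: State) -> float:
        return p_success if state.get(obj, False) else 0.05
    return value


SKILLS: List[Skill] = [
    Skill("find a sponge", lambda state: 0.8),
    Skill("pick up the sponge", needs_visible("sponge")),
    Skill("pick up the coke can", needs_visible("coke can")),
]

state: State = {"sponge": True, "coke can": False}
for skill in SKILLS:
    print(f"{skill.description}: {skill.affordance(state):.2f}")
# find a sponge: 0.80
# pick up the sponge: 0.90
# pick up the coke can: 0.05
```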

Development Details

The resulting method for LLM-robot interaction is called SayCan.

The robot used is a mobile manipulator from Everyday Robots with a 7-degree-of-freedom arm and a gripper.

The authors use reinforcement learning (RL) to learn language-conditioned value functions, which serve as affordances: estimates of what is possible in the real world. Human raters judge whether a language command was executed successfully by watching a video of the episode; the reward is 1.0 if the command was completed and 0.0 otherwise.
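With a sparse reward of 1.0 for success and 0.0 otherwise, and assuming no discounting, the expected return of a skill from a state is exactly its probability of success, which is why a value function can double as an affordance. The toy sketch below assumes a tabular setting with a handful of invented human ratings and uses a plain Monte Carlo average; the paper instead trains neural value functions with RL from the robot's observations. `monte_carlo_values` and the state labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each rated episode: (state label, skill description, human rating).
# The rating is the sparse reward: 1.0 if raters judged the command executed, else 0.0.
Episode = Tuple[str, str, float]


def monte_carlo_values(episodes: List[Episode]) -> Dict[Tuple[str, str], float]:
    """Average the terminal reward per (state, skill); with gamma = 1 this is the success rate."""
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    counts: Dict[Tuple[str, str], int] = defaultdict(int)
    for state, skill, reward in episodes:
        totals[(state, skill)] += reward
        counts[(state, skill)] += 1
    return {key: totals[key] / counts[key] for key in totals}


# Hypothetical ratings, invented purely for illustration.
episodes: List[Episode] = [
    ("near counter", "pick up the sponge", 1.0),
    ("near counter", "pick up the sponge", 1.0),
    ("near counter", "pick up the sponge", 0.0),
    ("near counter", "pick up the sponge", 1.0),
    ("far from counter", "pick up the sponge", 0.0),
    ("far from counter", "pick up the sponge", 0.0),
]

values = monte_carlo_values(episodes)
print(values[("near counter", "pick up the sponge")])      # 0.75
print(values[("far from counter", "pick up the sponge")])  # 0.0
```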

Rather than having the LLM generate free-form text, they use it as a “likelihood calculator”: it scores the text description of each skill in the robot's repository as a possible next step toward the instruction. A skill that the LLM considers more likely to help gets a higher log likelihood.
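Scoring a skill this way amounts to summing the per-token log probabilities of the skill's text, conditioned on the instruction. The sketch below assumes a hypothetical `next_token_prob` interface and uses a toy word-overlap model in place of a real LLM such as PaLM, so only the summation reflects the idea; the whitespace tokenizer is also a simplification.

```python
import math
from typing import Callable, List

# Hypothetical interface standing in for a real LLM:
# next_token_prob(prompt, previous_tokens, token) -> P(token | prompt, previous_tokens)
NextTokenProb = Callable[[str, List[str], str], float]


def skill_log_likelihood(prompt: str, skill_text: str, next_token_prob: NextTokenProb) -> float:
    """log P(skill_text | prompt) = sum over t of log P(token_t | prompt, tokens before t)."""
    tokens = skill_text.split()  # whitespace "tokenizer", a simplification
    return sum(
        math.log(next_token_prob(prompt, tokens[:t], tok)) for t, tok in enumerate(tokens)
    )


def toy_next_token_prob(prompt: str, previous: List[str], token: str) -> float:
    """Toy stand-in for an LLM: words that appear in the prompt are 5x more likely."""
    return 0.5 if token in prompt.lower().split() else 0.1


prompt = "I spilled some chips on the floor help"
ll_chips = skill_log_likelihood(prompt, "pick up the chips", toy_next_token_prob)
ll_vacuum = skill_log_likelihood(prompt, "find a vacuum cleaner", toy_next_token_prob)
print(ll_chips > ll_vacuum)  # True
```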

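Multiplying the LLM's probability for a skill by that skill's affordance (the probability that it succeeds from the current state) gives the probability that executing the skill makes successful progress on the task. The robot then executes the highest-scoring skill.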
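Written out, the robot picks the skill π that maximizes p(c_π | s, ℓ_π) · p(ℓ_π | i): the affordance of π in state s times the LLM's probability of π's description ℓ_π given instruction i. A minimal Python sketch of that selection, working in log space, follows; `saycan_select`, the skill names, and all numbers are invented for the spilled-chips example and are not from the paper.

```python
import math
from typing import Dict


def saycan_select(llm_logprob: Dict[str, float], affordance: Dict[str, float]) -> str:
    """Return argmax over skills of log p(l_pi | i) + log p(c_pi | s, l_pi).

    This is the same as maximizing the product of LLM probability and affordance.
    A zero affordance (skill impossible from this state) scores -inf.
    """
    def score(skill: str) -> float:
        a = affordance[skill]
        return llm_logprob[skill] + (math.log(a) if a > 0 else -math.inf)

    return max(llm_logprob, key=score)


llm_logprob = {
    "find a vacuum cleaner": math.log(0.6),  # the LLM's favourite on its own
    "find a broom": math.log(0.3),
    "go to the table": math.log(0.1),
}
affordance = {
    "find a vacuum cleaner": 0.0,  # there is no vacuum cleaner in this apartment
    "find a broom": 0.8,
    "go to the table": 0.9,
}
print(saycan_select(llm_logprob, affordance))  # find a broom
```

Taken alone, the LLM would pick “find a vacuum cleaner”; the zero affordance rules it out, which is exactly the grounding the robot is supposed to provide.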

Evaluation

Combining the flexibility of the LLM with the grounding of the robot has a regularizing effect on the LLM: plausible-sounding steps that the robot cannot actually carry out get down-weighted.

Most of the evaluation is split into two categories: the “plan” and “execute” phases.

As tasks increase in difficulty, the plan phase starts very strong and stays consistently above average performance. The bottleneck seems to be the robot itself: either what it can do, or what it says it can do.

They also employ chain of thought reasoning to allow the LLM to come up with a serialized list of tasks for the robot.
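The chain-of-thought prompting itself is not reproduced here. The sketch below only shows the outer loop that turns single-step scores into a serialized plan: score every skill, execute the best one, append it to the prompt, and repeat until a “done” skill wins. `saycan_plan`, the scripted LLM, the affordance and executor functions, and the skill names are all hypothetical stand-ins.

```python
import math
from typing import Callable, Dict, List

State = Dict[str, bool]


def saycan_plan(
    instruction: str,
    skills: List[str],
    llm_score: Callable[[str, str], float],     # (prompt, skill) -> log p(skill | prompt)
    affordance: Callable[[State, str], float],  # (state, skill) -> P(success)
    execute: Callable[[State, str], State],     # runs the skill, returns the new state
    state: State,
    max_steps: int = 10,
) -> List[str]:
    prompt = f"Human: {instruction}\nRobot: "
    plan: List[str] = []
    for _ in range(max_steps):
        scores = {}
        for skill in skills:
            a = affordance(state, skill)
            scores[skill] = llm_score(prompt, skill) + (math.log(a) if a > 0 else -math.inf)
        best = max(scores, key=scores.get)
        if best == "done":  # the combined score says the task is finished
            break
        plan.append(best)
        state = execute(state, best)  # no failure handling: a failed skill goes unnoticed
        prompt += f"{len(plan)}. {best}, "
    return plan


# --- Hypothetical toy components for "Get me a can of coke" ---
SCRIPT = ["find a coke can", "pick up the coke can", "bring it to you", "done"]
SKILLS = SCRIPT + ["find a vacuum cleaner"]


def toy_llm_score(prompt: str, skill: str) -> float:
    """Scripted 'LLM': favours the next step of SCRIPT not yet in the prompt."""
    n_done = sum(step in prompt for step in SCRIPT[:-1])
    return math.log(0.7) if skill == SCRIPT[n_done] else math.log(0.1)


def toy_affordance(state: State, skill: str) -> float:
    if skill == "find a vacuum cleaner":
        return 0.0  # there is no vacuum cleaner in this kitchen
    if skill == "pick up the coke can":
        return 0.9 if state["coke visible"] else 0.05
    return 1.0 if skill == "done" else 0.9


def toy_execute(state: State, skill: str) -> State:
    return {**state, "coke visible": state["coke visible"] or skill == "find a coke can"}


plan = saycan_plan("Get me a can of coke", SKILLS, toy_llm_score, toy_affordance,
                   toy_execute, {"coke visible": False})
print(plan)  # ['find a coke can', 'pick up the coke can', 'bring it to you']
```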

Limitations and Future Work

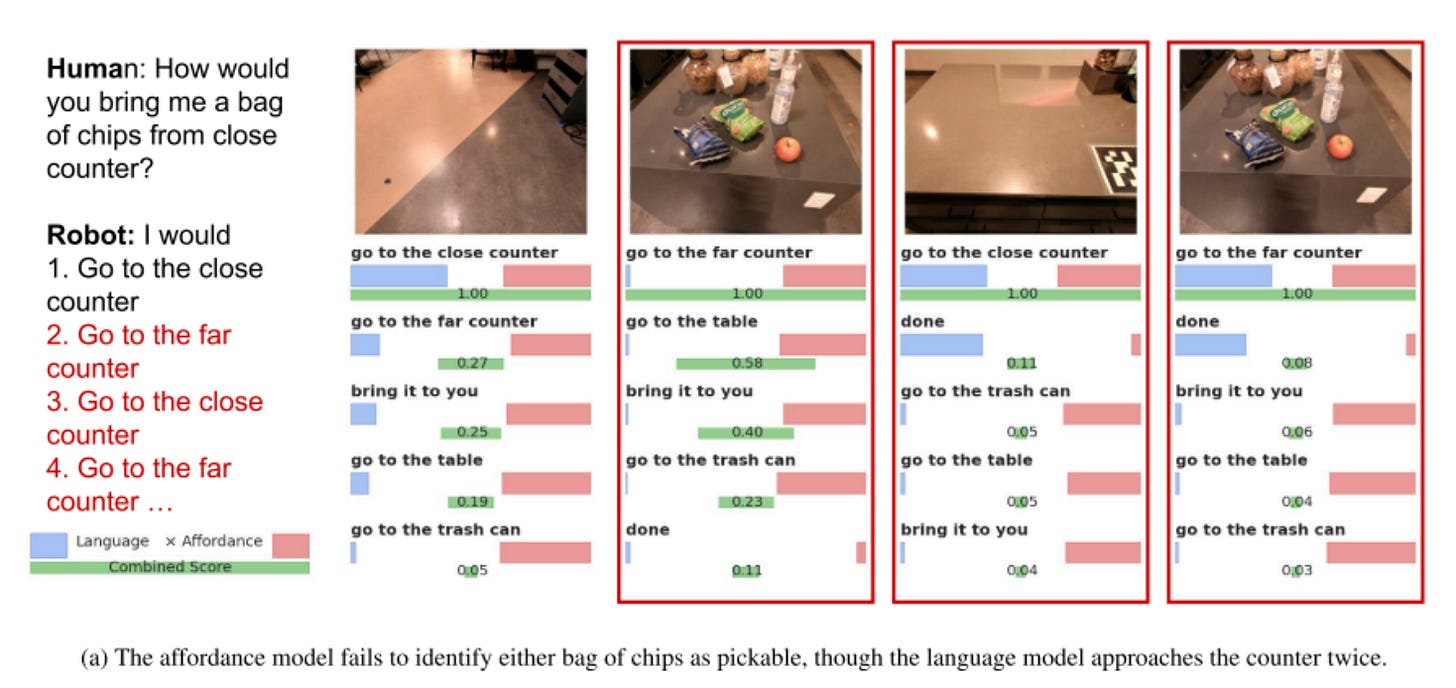

As the paper itself notes, the primary bottleneck is the range and capability of the robot's underlying skills. Here’s one example in which the model doesn’t identify the objects the LLM needs to use.

Similarly, here’s an example where the LLM suggests the right action but the affordance model deems it impossible.

It makes sense that this is the bottleneck: the affordance model is trained on rewards from humans rating videos, which does not scale. I don’t think the current technique is viable at scale, and I look forward to the self-supervised or semi-supervised RL ideas that future work comes up with.

The system is also not robust: it does not react when an individual skill fails despite its value function reporting a high value.

Another interesting direction for future work is exploring how grounding the LLM in real-world robotic experience could be used to improve the language model itself.

In Summary

For a first attempt at bringing LLMs into the real world, SayCan is a wonderfully simple idea. The robot’s actions are highly interpretable, and it leverages the casual nature of LLMs extremely well.

There are two really cool insights in this paper: first, that grounding large language models in the real world (or in equivalent settings like factual truth) makes them more powerful, robust, and dependable; and second, that LLMs can and will be used not only for conversation but also for action.

Now we just need to let an LLM drive a car.

Until next time!