Training Compute-Optimal Large Language Models

NeurIPS 2022 Outstanding Paper, DeepMind

If you’ve seen the “Chinchilla” paper mentioned in online AI conversations, this is the paper they’re talking about.

Introduction and Motivation

Goal: When training large models, we typically work with a fixed compute budget. Compute is measured in FLOPs, short for “floating point operations” (a total count, not to be confused with FLOPS, floating point operations per second). Given a fixed number of FLOPs, how should one trade off parameter count (model size) against token count (data size)?
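To make the trade-off concrete, the paper (following Kaplan et al.) approximates the training compute of a dense transformer as C ≈ 6ND, where N is the parameter count and D is the number of training tokens. The Python sketch below is a minimal illustration under that approximation; the function names and the 1e21-FLOP budget are hypothetical.

```python
# Rough training-compute model: total training FLOPs C ≈ 6 * N * D,
# where N = parameter count and D = number of training tokens.
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training FLOPs for a dense transformer."""
    return 6.0 * n_params * n_tokens


def tokens_for_budget(flops_budget: float, n_params: float) -> float:
    """Tokens a model with n_params parameters can be trained on within the budget."""
    return flops_budget / (6.0 * n_params)


if __name__ == "__main__":
    budget = 1e21  # hypothetical fixed compute budget, in FLOPs
    for n in (1e8, 1e9, 1e10):
        d = tokens_for_budget(budget, n)
        print(f"N = {n:.0e} params -> D = {d:.2e} tokens")
```

Under a fixed budget, each tenfold increase in model size leaves ten times fewer tokens; the question the paper answers is which point on this curve gives the lowest loss.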

Previous research: Kaplan et al. (OpenAI, 2020) [2] proposed scaling laws that favored growing parameter count much faster than token count as compute increases. This helps explain why model sizes exploded in the ~3 years before this paper.

Core Insight: Empirical evidence in this paper suggests that parameter count and token count should be scaled in equal proportion: for every doubling of model size, the number of training tokens should also be doubled.
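One way to see the difference between the two findings is to write the compute-optimal model size and data size as power laws, N_opt ∝ C^a and D_opt ∝ C^b. The exponents below are the rounded values the Chinchilla paper uses to summarize Kaplan et al. (a ≈ 0.73, b ≈ 0.27) and its own result (a ≈ b ≈ 0.5); the dictionary and function names are hypothetical.

```python
# Compute-optimal scaling written as N_opt ∝ C**a and D_opt ∝ C**b.
# Rounded exponents as summarized in the Chinchilla paper:
#   Kaplan et al. (2020):   a ≈ 0.73, b ≈ 0.27
#   Hoffmann et al. (2022): a ≈ 0.50, b ≈ 0.50
EXPONENTS = {
    "kaplan_2020": (0.73, 0.27),
    "chinchilla_2022": (0.50, 0.50),
}


def growth_factors(compute_multiplier: float, a: float, b: float) -> tuple[float, float]:
    """Growth of optimal parameter and token counts when compute grows by compute_multiplier."""
    return compute_multiplier ** a, compute_multiplier ** b


if __name__ == "__main__":
    for name, (a, b) in EXPONENTS.items():
        n_growth, d_growth = growth_factors(10.0, a, b)
        print(f"{name}: 10x compute -> {n_growth:.2f}x params, {d_growth:.2f}x tokens")
```

Because C ≈ 6ND, the two growth factors always multiply to the compute multiplier (a + b = 1); the two papers disagree only on how that factor is split between parameters and tokens.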

Implication: Most modern large language models are under-trained.

The Data That Led to This Conclusion

The authors trained over 400 language models, ranging from 70M to over 16B parameters on 5 to 500 billion tokens. Here’s what the data says:

First, they demonstrate that:

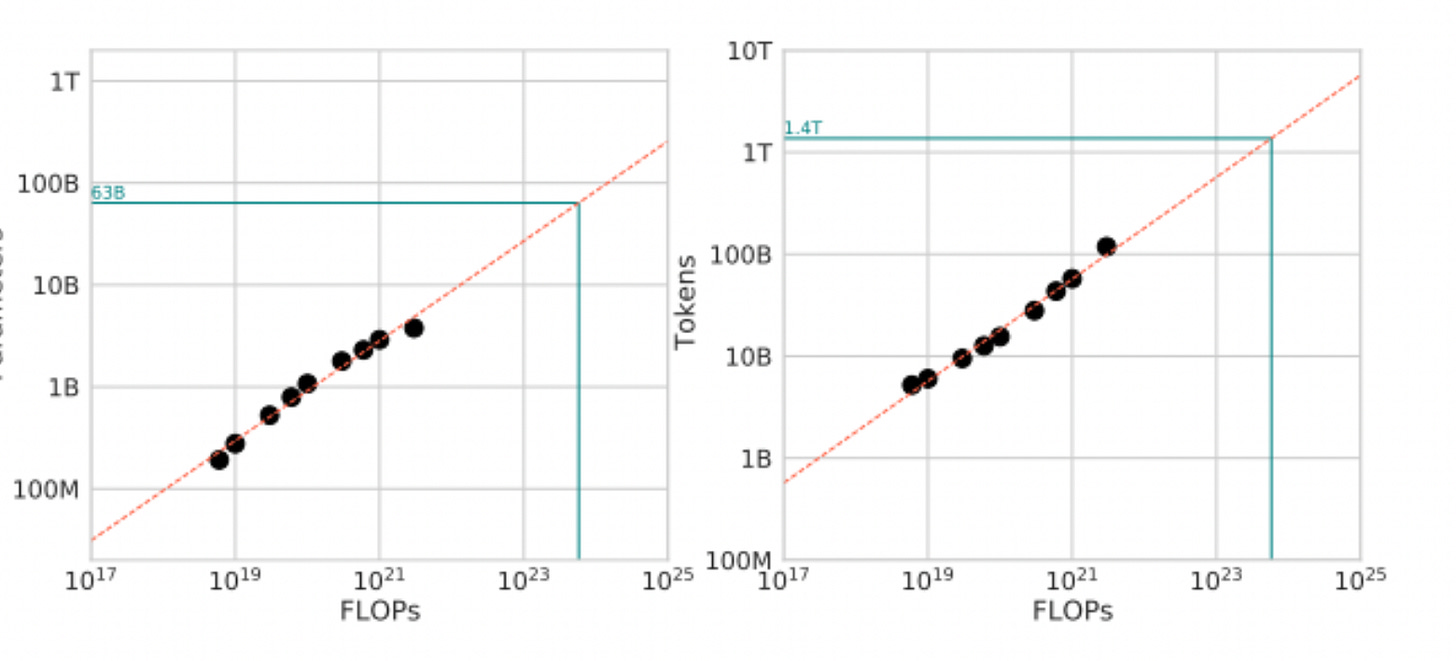

For each compute budget, the parameter count that reaches the lowest loss grows as a power law of compute, i.e., a straight line on log-log axes.

The optimal number of training tokens likewise grows as a power law of compute.

Here, “optimal” means the lowest training loss achievable for a given compute budget.
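A power law y = k·x^a becomes a straight line after taking logs (log y = log k + a·log x), so its exponent can be estimated with ordinary least squares in log space. A minimal sketch, assuming plain OLS (the paper’s exact fitting procedure may differ) and synthetic data generated from a made-up law N_opt = 0.1·C^0.5, not the paper’s measurements:

```python
import math


def fit_power_law(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Least-squares fit of y = k * x**a on log-log axes; returns (k, a)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    # Slope of the regression line in log space is the power-law exponent.
    cov = sum((u - mean_x) * (v - mean_y) for u, v in zip(lx, ly))
    var = sum((u - mean_x) ** 2 for u in lx)
    a = cov / var
    # Intercept in log space gives the prefactor.
    k = math.exp(mean_y - a * mean_x)
    return k, a


if __name__ == "__main__":
    # Hypothetical (compute, optimal-params) points from N_opt = 0.1 * C**0.5.
    # These are NOT the paper's measurements; they only demonstrate the fitting step.
    budgets = [1e18, 1e19, 1e20, 1e21, 1e22]
    n_opt = [0.1 * c ** 0.5 for c in budgets]
    k, a = fit_power_law(budgets, n_opt)
    print(f"fitted exponent a = {a:.3f}, prefactor k = {k:.3g}")
```

On noise-free synthetic data the fit recovers the generating exponent; with real training runs, the scatter around the line is what makes the estimated exponents differ slightly between methods.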

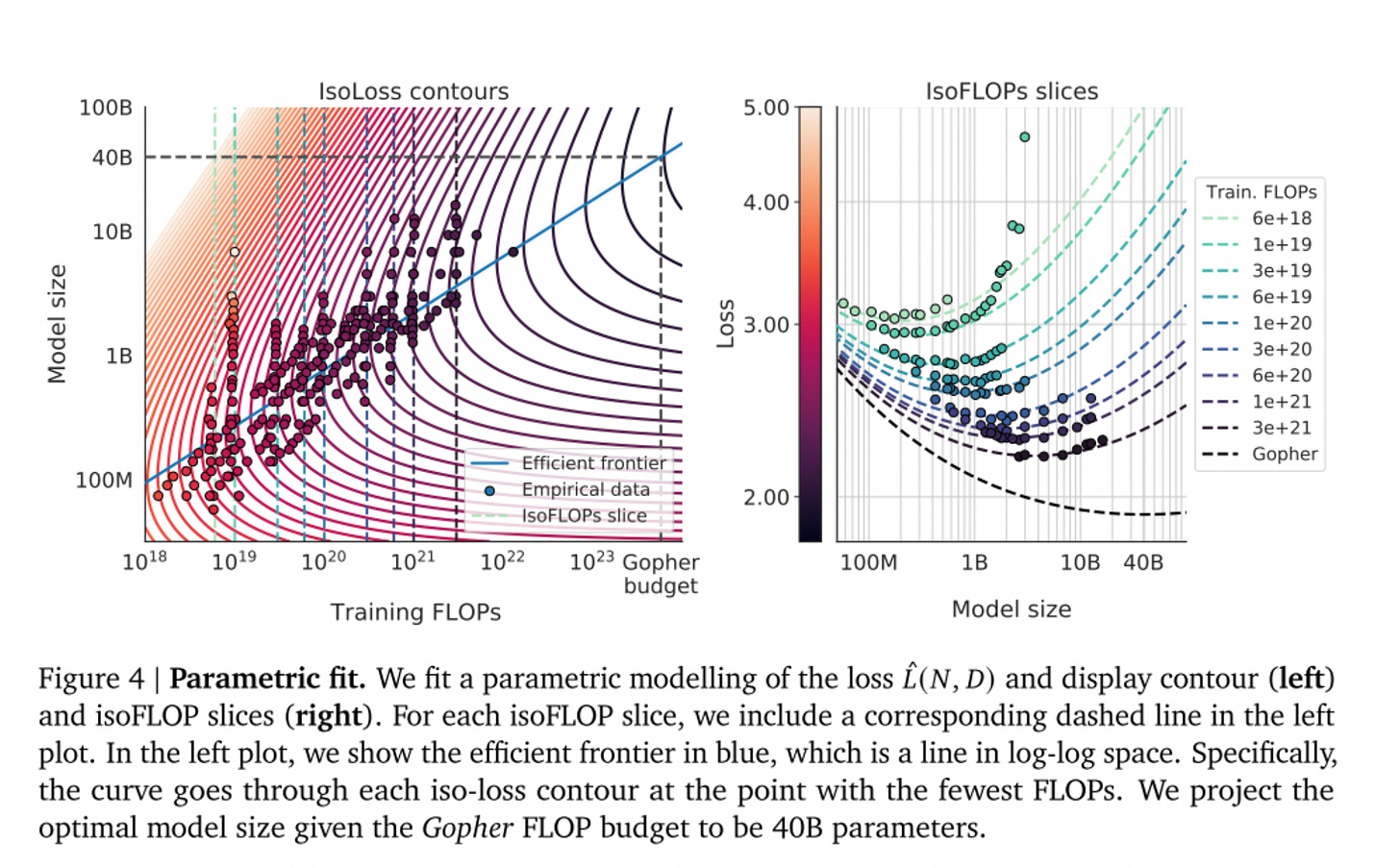

These IsoLoss contours show that model size and training FLOPs can be traded off against each other: every point on a contour reaches the same loss. For example, a 100M-parameter model can reach the same loss as a 1B-parameter model, given enough training FLOPs (and tokens).
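The paper also fits a parametric loss L(N, D) = E + A/N^α + B/D^β (its Approach 3), which makes the IsoLoss idea computable: fix a target loss and solve for the number of tokens each model size needs. The sketch below plugs in the fitted constants reported in the paper (E = 1.69, A = 406.4, B = 410.7, α = 0.34, β = 0.28) purely for illustration; the target loss of 2.6 and the function names are hypothetical.

```python
import math

# Parametric loss L(N, D) = E + A / N**alpha + B / D**beta, using the fitted
# constants reported for the paper's Approach 3 (illustration only).
E, A, B, ALPHA, BETA = 1.69, 406.4, 410.7, 0.34, 0.28


def loss(n_params: float, n_tokens: float) -> float:
    """Predicted training loss for a model of n_params trained on n_tokens."""
    return E + A / n_params ** ALPHA + B / n_tokens ** BETA


def tokens_for_loss(n_params: float, target_loss: float) -> float:
    """Tokens needed for a model of size n_params to reach target_loss (inf if unreachable)."""
    residual = target_loss - E - A / n_params ** ALPHA
    if residual <= 0:
        return math.inf  # even unlimited data cannot reach this loss at this model size
    return (B / residual) ** (1.0 / BETA)


if __name__ == "__main__":
    target = 2.6  # hypothetical loss level, i.e. one IsoLoss contour
    for n in (1e8, 1e9):
        d = tokens_for_loss(n, target)
        print(f"N = {n:.0e}: D = {d:.2e} tokens, C ≈ {6 * n * d:.2e} FLOPs")
```

With these constants, the 100M-parameter model needs roughly two orders of magnitude more tokens than the 1B model to reach the same loss, and a loss below about 2.46 is out of its reach altogether.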

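Finally, they train Chinchilla, a 70B-parameter model that uses the same compute budget as the 280B-parameter Gopher but is 4× smaller and trained on about 4× more data (1.4T tokens vs. 300B). At this compute-optimal allocation, Chinchilla outperforms Gopher (280B), GPT-3 (175B), Jurassic-1 (178B), and Megatron-Turing NLG (530B) on a large range of downstream tasks. On MMLU, a classic LLM benchmark, it reaches an average accuracy of 67.5%, more than 7 points above Gopher.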
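A quick back-of-the-envelope check of this comparison, using the C ≈ 6ND approximation and the published model sizes (Gopher: 280B parameters, 300B tokens; Chinchilla: 70B parameters, 1.4T tokens). The approximation is coarse, so the two totals agree only to within roughly 20%; the names below are hypothetical.

```python
# Published sizes: (parameters, training tokens).
MODELS = {
    "Gopher": (280e9, 300e9),
    "Chinchilla": (70e9, 1.4e12),
}


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training FLOPs via C ≈ 6 * N * D."""
    return 6.0 * n_params * n_tokens


if __name__ == "__main__":
    for name, (n, d) in MODELS.items():
        print(f"{name}: {training_flops(n, d):.2e} FLOPs, {d / n:.1f} tokens/param")
```

Both totals land between 5 and 6 × 10^23 FLOPs, while the tokens-per-parameter ratio differs by roughly 19×: about 1 for Gopher versus 20 for Chinchilla.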

Future work: The paper did not consider models trained for multiple epochs. The more recent success of models like Galactica [3] suggests that multi-epoch training can be valuable.

References

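[1] Hoffmann et al., “Training Compute-Optimal Large Language Models” (DeepMind, 2022): https://arxiv.org/abs/2203.15556

[2] Kaplan et al., “Scaling Laws for Neural Language Models” (OpenAI, 2020): https://arxiv.org/abs/2001.08361

[3] Taylor et al., “Galactica: A Large Language Model for Science” (Meta AI, 2022): https://arxiv.org/abs/2211.09085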