Quadratic Complexity holds back the legendary transformer (Part 2)

Removing the attention block altogether

Last week, we started our series on understanding the fundamental limitations of the transformers. We talked about the quadratic complexity of the attention mechanism at the heart of the transformer, which was limiting context length. We discussed kernalizing as well as leveraging external memory as ways to improve the attention model’s runtime.

Today, we take a step back and ask: Is attention even the right mechanism for a model like this? If one day we want to attend to millions of tokens (like, for example, an entire book), would these system optimizations ever get us there? The simple answer— no.

Instead, we consider leveraging ways to process a discrete sequence from other domains like signal processing, and look into structured state spaces. What are structured state spaces?

Very simply, they are feedback loops. They accept inputs (u) and emit outputs (y), while maintaining a mapping through some internal state (x). To those familiar with neural architectures, this should sound very close to what an encoder does, where x is some hidden state.

That’s partly because machine learning owes some of its greatest ideas to signal processing. Today, we discuss another pretty great idea. We’re talking about “S4: Structured State Spaces For Sequence Modelling”.

Introduction and Motivation

Goal: Encode self-attention through structured state spaces, to be able to compute attention-like systems in sub-quadratic time.

Constraints: SSMs are closer to RNNs or LSTMs than they are to attention — state comes in, new state comes out, loop around. This means that they might even be worse than attention at runtime complexity (😱).

Core Insight: Structured state spaces can be represented as convolution through use of a special kernel SSM Convolution Kernel. If the SSM can be represented as a convolution, it can be parallelized, executed in linear time, and it can be optimized.

Development Details

Finding the SSM Convolution Kernel is not a trivial task. The core contribution of the paper centers around being able to efficiently find the kernel. There is a lot of math in this section, and I will not go over all of it here. Instead, here’s a few very interesting highlights:

One of the core ways to make the SSM efficient is to represent it as a HiPPO matrix: this matrix aims to compress the past history into a state that has enough information to approximately reconstruct the history.

The algorithm is designed such that it can skip the materialization of the hidden state at test time, which saves a lot of I/O cost and allows for batching.

All of this amounts to improved time complexity — S4 has the best time complexity across the board, through training and inference time, and in parameter counts.

Evaluation

Let’s jump straight into looking at some data! First, we see that S4 is very memory efficient, even if it is often slower than the transformer model.

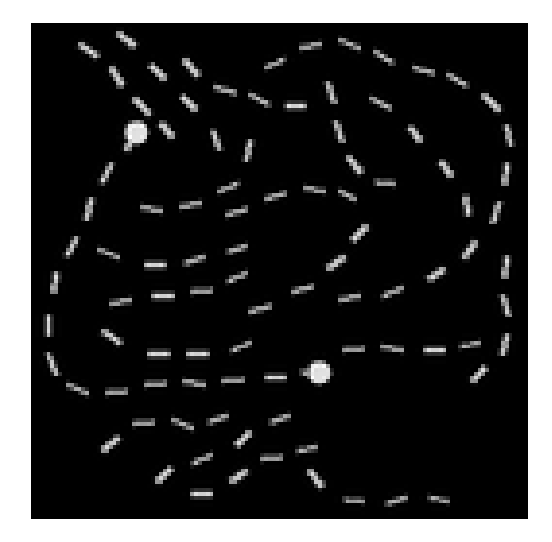

Another very famous large model test is the “Pathfinder” benchmark. It looks something like this:

The model must declare whether the two markers are connected by a path or not. It is supposed to model long-range dependencies. S4 crushes it at long-range dependency tests.

Similarly, S4 performs quite well at generative models in language, video, audio or art.

If we take a look at the design’s ablation studies, we see that for validation accuracy, every component is needed.

Limitations and Future Work

The S4 operation only right now works on 1-dimensional input. This means that, when acting as a layer of k-dimensional embeddings, for example, they create k SSMs, then use a linear layer + an activation to mix them together. Future work could investigate how to make the SSM multi-dimensional.

S4, for all its greatness, is still beat by the Transformer. The Transformer benchmark does generative text better, and is also faster at training time. This is critical in large-scale training.

Exciting future work could explore how all these techniques could complement one another — could SSMs and attention be combined together, in the style of a memorizing transformer?

In Summary

I complain a lot about the state of AI research — OpenAI refuses to publish papers, it’s all about scaling, no one’s doing actual science blah blah blah. But papers like these still exist out there — even if they aren’t nearly as possible as the scaling papers. Taking a core idea from another domain, remixing and applying to deep learning, fundamentally improving architecture. This is what great research is all about!

Instead of giving you my thoughts, I leave you today with a reading list. S4 is an amazing paper, by some very cool people at Stanford. Check out their work, that they painstakingly present in accessible and educational form!

References

Until next time!